Every day, Eagle Alpha helps data-intensive organizations find the right datasets to answer their research questions or meet their model requirements. One of the challenges in finding relevant datasets is assessing use-case suitability across the more than 1,900 datasets listed on the Eagle Alpha platform. Further complicating this, many datasets are difficult to categorize, and data vendors often use their own vocabulary to describe their data, so simple keyword-based searches sometimes perform poorly.

A Semantic Approach to Search

With the increased focus on Generative AI in the last few years, one overlooked development associated with Large Language Models (LLMs) has been the improvement of large language embedding models. These embedding models allow for meaning-based comparisons between words, phrases and even paragraphs, and they are increasingly fast enough to be used in real-time contexts.

Along with the structured categorical data captured on dataset profiles, the Eagle Alpha platform holds a significant amount of “less-structured” text data. Some of it is entered directly into a profile field such as the description; some comes from the text of PDF attachments that data vendors upload, commonly use cases, documentation, and marketing materials for their products. With the progress in large language embedding models, extracting the rich insights in the thousands of attachments that vendors have uploaded to their profiles has started to look feasible.

Organizing the Meaning of Text

The core concept of an embedding model is to take natural language input, such as a sentence or paragraph, and return a vector that describes the “location in space” of that text. The high-dimensional neighborhood surrounding any given text input is made up of concepts that the model has learned are related to one another. Calculating the distance (or similarity) between two vectors provides a straightforward way to assess how related one piece of text is to any other. While this sounds simple for two vectors, performing the computation in real time, at scale, across the full contents of phrases, sentences and paragraphs on the Eagle Alpha platform brings up some challenges.
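The article does not say which metric the search uses; cosine similarity is a common choice for comparing text embeddings. The minimal sketch below, in plain Python, shows the comparison, with small toy vectors standing in for real 1024-dimensional embeddings.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


# Toy 3-dimensional vectors; real embeddings have hundreds or thousands of dimensions.
print(cosine_similarity([1.0, 1.0, 0.0], [1.0, 0.0, 0.0]))
```

Higher scores mean closer neighbors. When vectors are already normalized to unit length, cosine similarity reduces to a plain dot product.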

First, let’s start with the data. Compared with many of the data products listed on the Eagle Alpha platform, text data can be significantly messier, even when considering just a “rich text” HTML field on a dataset profile. Users completing a profile can add bold text, bulleted lists, links, section titles, and even tables of numbers. Because we are using a commercially available embedding model (in this case, Amazon Titan Text Embeddings V2 hosted on Amazon Bedrock), we can only assess how these variations in text layout affect performance by trial and error.
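Before embedding, rich-text HTML has to be reduced to plain text. The sketch below is a hypothetical approach using only Python’s standard `html.parser`. It keeps block-level elements (paragraphs, list items, headings, table cells) on separate lines so that bullets do not run together, and it decodes entities such as `&amp;`. The set of block tags is an assumption, not the platform’s actual parser.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects visible text, inserting line breaks around block-level tags."""

    # Hypothetical choice of tags that should start a new line.
    BLOCK_TAGS = {"p", "div", "li", "br", "h1", "h2", "h3", "h4", "h5", "h6", "tr", "td", "th"}

    def __init__(self) -> None:
        super().__init__()  # convert_charrefs=True by default, so entities arrive decoded
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.BLOCK_TAGS:
            self.parts.append("\n")

    def handle_endtag(self, tag):
        if tag in self.BLOCK_TAGS:
            self.parts.append("\n")

    def handle_data(self, data):
        self.parts.append(data)


def rich_text_to_lines(html: str) -> list[str]:
    """Return the non-empty text lines of an HTML fragment, whitespace collapsed."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    text = "".join(parser.parts)
    lines = [" ".join(line.split()) for line in text.split("\n")]
    return [line for line in lines if line]


html = "<p>Daily <b>crude</b> volumes.</p><ul><li>Cushing, OK</li><li>339 tanks</li></ul>"
print(rich_text_to_lines(html))
```

Inline formatting such as `<b>` stays within its sentence, while each list item becomes its own line for the chunking step that follows.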

Vector outputs can be highly dependent on the cleanliness and completeness of the input text. In our qualitative assessments, fully formed sentences produced the best-performing representations, while short phrases or individual words often landed in a neutral “global neighborhood” roughly equidistant from the rest of the vector space. Because of this, we focused our efforts on efficiently “chunking” the raw text into complete sentences made of valid real words.
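A minimal sketch of sentence-level chunking, assuming a naive regex splitter (it splits after `.`, `!` or `?` followed by a capital letter or digit, so abbreviations like “U.S. Steel” would be split incorrectly). Whole sentences are packed into chunks up to a hypothetical `max_chars` limit, and a sentence is never cut in half.

```python
import re

# Split after sentence-ending punctuation when followed by whitespace and a capital letter or digit.
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+(?=[A-Z0-9])")


def split_sentences(text: str) -> list[str]:
    text = " ".join(text.split())  # normalize line breaks and repeated spaces
    return [s for s in _SENTENCE_END.split(text) if s]


def chunk_sentences(text: str, max_chars: int = 600) -> list[str]:
    """Pack whole sentences into chunks of at most max_chars.

    A single sentence longer than max_chars is kept whole as its own chunk.
    """
    chunks: list[str] = []
    current = ""
    for sentence in split_sentences(text):
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > max_chars:
            chunks.append(current)
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


text = "The site is monitored daily. Volumes are reported weekly.\nCoverage starts in 2016."
print(chunk_sentences(text, max_chars=60))
```

A production chunker would likely use a proper sentence tokenizer; the point here is only that the embedding model receives complete sentences rather than arbitrary fixed-width slices.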

For example, when using simple space-delimited parsers, we found that the axis values of plots in Case Study PDFs were often extracted as phrases, so we added filters that check for valid real words before the vector embedding step. Titles, free-floating phrases in presentation slides, and bulleted lists of phrases were particularly challenging to parse and condense into the sentence-like groupings the embedding model works well with.
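The article does not describe its word filter. As a hypothetical stand-in, the sketch below uses a simple heuristic instead of a dictionary lookup: a token counts as word-like if, after trimming edge punctuation, it is alphabetic, has at least two letters, and contains a vowel. A chunk is kept only if it has enough word-like tokens, both in count and as a share of all tokens. Both thresholds are assumptions.

```python
import string

_VOWELS = set("aeiouyAEIOUY")


def is_word_like(token: str) -> bool:
    """Heuristic: alphabetic after trimming edge punctuation, 2+ letters, contains a vowel."""
    core = token.strip(string.punctuation)
    return len(core) >= 2 and core.isalpha() and any(ch in _VOWELS for ch in core)


def looks_like_prose(chunk: str, min_words: int = 5, min_ratio: float = 0.6) -> bool:
    """Keep chunks with enough word-like tokens (hypothetical thresholds)."""
    tokens = chunk.split()
    if not tokens:
        return False
    words = [t for t in tokens if is_word_like(t)]
    return len(words) >= min_words and len(words) / len(tokens) >= min_ratio


chunks = [
    "0 25 50 75 100 2019 2020 2021 Q1 Q2",  # axis residue from a chart
    "Cushing storage levels rose sharply during the first quarter.",
    "Key Findings",  # slide title: too short to embed well on its own
]
print([c for c in chunks if looks_like_prose(c)])
```

A dictionary-based check would be stricter about “valid real words”; this heuristic mainly catches numeric axis ticks and very short fragments.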

Using Structured Representations Where We Have Them

Another challenge we faced was how to incorporate a dataset profile’s structured tags into the vector “similarity” search. The structured tags on data profiles can be highly informative, and we didn’t want to “start from scratch” by ignoring these domain-specific data points. For example, every published data profile is tagged to a Data Category in our alternative data taxonomy of 16 categories and 56 subcategories.

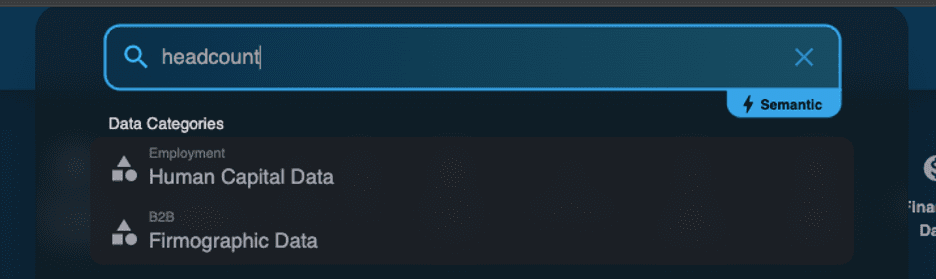

When a user types a query in the search box, before finding the most similar sentences from profiles and attachments, we first want to suggest viewing datasets tagged to the category or industry sector most relevant to the query. To make this feasible, we embedded Eagle Alpha-written content about each data category and sector in a dedicated index. As the user types, the input text is embedded and compared against this tag index, returning the most relevant suggestions in real time. For example, the query “headcount” produces real-time suggestions relating it to both the Human Capital category of Employment data and the Firmographic category of B2B data.
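The suggestion step can be sketched as a top-k lookup over a small tag index. The article stores these vectors in OpenSearch; for illustration, this sketch assumes an in-memory dict of tag name to vector, hypothetical 3-dimensional toy vectors, and a hypothetical `min_score` cutoff so that weak matches are not suggested at all.

```python
import heapq
import math


def _cosine(a, b):
    # Assumes non-zero vectors of equal length.
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def suggest_tags(query_vec, tag_index, k=2, min_score=0.3):
    """Return up to k (tag, score) pairs ranked by cosine similarity, dropping weak matches."""
    scored = ((tag, _cosine(query_vec, vec)) for tag, vec in tag_index.items())
    best = heapq.nlargest(k, scored, key=lambda pair: pair[1])
    return [(tag, score) for tag, score in best if score >= min_score]


# Hypothetical toy vectors standing in for embeddings of Eagle Alpha-written tag descriptions.
tag_index = {
    "Employment (Human Capital)": [0.9, 0.1, 0.0],
    "B2B (Firmographic)": [0.6, 0.6, 0.1],
    "Weather (Satellite and Weather)": [0.0, 0.1, 0.95],
}
headcount_vec = [0.8, 0.4, 0.0]  # pretend embedding of the query "headcount"
print(suggest_tags(headcount_vec, tag_index, k=3))
```

Because the tag index holds only a few hundred entries at most, even an exact scan like this is cheap; the same query run against a k-NN index behaves the same way.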

We found this approach doesn’t always perform well for very specific “long-tail” tag values. When we vectorized Countries using their names and short descriptions, the embedding model tended to place these relatively unique words in a neutral “global neighborhood.” It was remarkably challenging to stop the search input “Florida” from returning the country Fiji instead of the United States, and this ultimately led us to a keyword-based suggestion approach for some of these long-tail tags. We continue to research semantic representations of fields and field values, as we think this is a very promising area for further improvements throughout the platform.
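A keyword-based fallback for long-tail tags might look like the hypothetical sketch below: each tag carries a list of aliases (for example, US states mapped to the United States), and a tag is suggested when any alias starts with the typed text. The alias table and prefix-matching rule are assumptions for illustration, not the platform’s implementation.

```python
def suggest_long_tail(query: str, aliases: dict[str, list[str]], limit: int = 3) -> list[str]:
    """Suggest tags whose aliases start with the typed text (case-insensitive prefix match)."""
    q = query.strip().casefold()
    if not q:
        return []
    matches = [
        tag
        for tag, names in aliases.items()
        if any(name.casefold().startswith(q) for name in names)
    ]
    return sorted(matches)[:limit]


# Hypothetical alias table; a real one would be curated per tag.
country_aliases = {
    "United States": ["United States", "USA", "US", "Florida", "Texas", "Oklahoma"],
    "Fiji": ["Fiji"],
    "France": ["France"],
}
print(suggest_long_tail("Flor", country_aliases))
```

Unlike the embedding lookup, a prefix match cannot drift toward a lexically unrelated but “neutral” neighbor such as Fiji, at the cost of needing explicit aliases.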

Infrastructure Setup

Achieving “as-you-type” latency for search suggestions and semantic results required significant tuning of the index infrastructure used to store and compare the embedding vectors. For this work, we used an AWS-hosted OpenSearch cluster running the Lucene k-NN engine with two dedicated indices: 1) an index storing the vectorized text from dataset profiles and attachments, and 2) an index storing vectorized descriptive text for tag options such as Data Category values. We used approximate k-nearest-neighbor (k-NN) search, as exact brute-force search would be computationally prohibitive for a real-time response. To counter approximation noise, we selected a relatively large K value, rolled individual mentions up to the dataset-document level, and ranked the results using a composite of the mentions’ relevance scores.
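The article does not give the composite formula used for the roll-up. One hypothetical choice is sketched below: take the best mention score per dataset and add a small bonus for each additional supporting mention, capped so that a long document cannot win on volume alone. The weights and cap are illustrative assumptions.

```python
from collections import defaultdict


def rank_datasets(hits, top_weight=1.0, rest_weight=0.1, max_mentions=5):
    """Roll mention-level k-NN hits up to datasets and rank them.

    hits: iterable of (dataset_id, score) pairs, e.g. the top-K hits for one query.
    Composite (hypothetical): top_weight * best score
    + rest_weight * sum of the next (max_mentions - 1) scores.
    Returns (dataset_id, composite, mention_count) tuples, best first.
    """
    by_dataset = defaultdict(list)
    for dataset_id, score in hits:
        by_dataset[dataset_id].append(score)

    ranked = []
    for dataset_id, scores in by_dataset.items():
        scores.sort(reverse=True)
        top = scores[:max_mentions]
        composite = top_weight * top[0] + rest_weight * sum(top[1:])
        ranked.append((dataset_id, composite, len(scores)))

    ranked.sort(key=lambda row: row[1], reverse=True)
    return ranked


hits = [("tankwatch", 0.82), ("fleet", 0.85), ("tankwatch", 0.80), ("tankwatch", 0.78)]
print(rank_datasets(hits))
```

A large K pays off here: with more neighbors retrieved, each dataset’s ranking rests on several mentions rather than on a single, possibly noisy, approximate match.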

As noted, we used cloud-hosted, commercially available embedding models. After assessing a few of them, we selected Amazon Titan Text Embeddings V2, which offers three vector sizes (1024, 512, and 256 dimensions). We opted for the largest (1024 dimensions) because the latency saved by a smaller vector was small compared with the overall latency volatility of calling a cloud-hosted service.
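A minimal sketch of requesting a Titan Text Embeddings V2 vector through the Amazon Bedrock runtime API. It assumes a boto3 `bedrock-runtime` client is passed in, and it uses the `inputText`, `dimensions` and `normalize` request fields and the `embedding` response field of that model. The wrapper function itself is hypothetical.

```python
import json

TITAN_V2_MODEL_ID = "amazon.titan-embed-text-v2:0"


def embed_text(client, text: str, dimensions: int = 1024, normalize: bool = True) -> list[float]:
    """Embed text with Titan Text Embeddings V2 via a boto3 'bedrock-runtime' client."""
    if dimensions not in (256, 512, 1024):
        raise ValueError("Titan Text Embeddings V2 supports 256, 512 or 1024 dimensions")
    response = client.invoke_model(
        modelId=TITAN_V2_MODEL_ID,
        body=json.dumps({"inputText": text, "dimensions": dimensions, "normalize": normalize}),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream; its JSON payload carries the vector under "embedding".
    payload = json.loads(response["body"].read())
    return payload["embedding"]
```

Usage, assuming AWS credentials and model access are configured (the region is an assumption): `client = boto3.client("bedrock-runtime", region_name="us-east-1")`, then `vec = embed_text(client, "crude capacity in OK")`. Requesting normalized vectors means cosine similarity equals a dot product downstream.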

A New Search Experience for Data: Some Examples

To demonstrate the pieces mentioned above working together, let’s look at a few example queries.

Searching for Oil in Oklahoma

A user might want to find datasets that indicate the oil storage capacity at the Cushing, Oklahoma site, a major US oil storage facility. As this user might be an Energy analyst who is short on time, they type the query “crude capacity in OK” instead of spelling out “Cushing, Oklahoma” or even mentioning the word “oil”.

As the user types, the categorical suggestions recommend the Energy category of Business Insights data and the Oil & Gas Storage industry sector tags as filters. When the user presses Enter and performs a Semantic search, the top result comes from the text of an uploaded Whitepaper: “This dataset covers daily volume of crude oil stored in each of 339 floating-roof tanks (FRT) in Cushing, OK”. The second result is found in the profile description of Geospatial Insight’s TankWatch Cushing dataset: “The Cushing, Oklahoma oil storage site is monitored on a continuous basis enabling detailed measurement and highly accurate insight into possible changes in supply and demand”. While this is a relatively small leap, the vector search using the large language embedding model successfully related the abbreviation “OK” to Oklahoma, and “crude” to oil, without any keyword-based matching.

The user is also shown the Energy category of Business Insights data, with options to learn more about Energy data on Eagle Alpha’s detailed Category Page, view all datasets in this category, or “focus” the search on content from datasets tagged to this category. This Focused Search is an example of functionality that combines the platform’s structured and unstructured content.
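One way to express a focused search in OpenSearch is a k-NN query with a filter, which the Lucene engine supports as efficient filtering in recent OpenSearch versions. The sketch below only builds the query body; the field names `embedding` and `data_category` are hypothetical, and the article does not say this is how Focused Search is implemented.

```python
def focused_knn_query(query_vec, k=100, category=None,
                      vector_field="embedding", category_field="data_category"):
    """Build an OpenSearch k-NN query body, optionally restricted to one Data Category.

    vector_field and category_field are hypothetical index field names;
    category_field is assumed to be a keyword field so a term filter matches exactly.
    """
    knn_clause = {"vector": list(query_vec), "k": k}
    if category is not None:
        # Filter applied during the k-NN search, so results are the k nearest *within* the category.
        knn_clause["filter"] = {"term": {category_field: category}}
    return {"size": k, "query": {"knn": {vector_field: knn_clause}}}


body = focused_knn_query([0.12, -0.03, 0.88], k=50, category="Energy")
print(body["query"]["knn"]["embedding"]["filter"])
```

With the `opensearch-py` client, such a body would be sent with `client.search(index=..., body=body)`, where the index name is whatever the deployment uses.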

Hurricane Exposure for Commercial Property

With an active hurricane season underway in the US, a user might be looking to assess property risk due to hurricanes for a publicly traded company’s franchise locations. The user might not want to give away the specific company they are interested in, so they query “commercial property hurricane exposure.”

As the user types, the categorical suggestions include the Real Estate category of Business Insights data and the Weather category of Satellite and Weather data. When the user presses Enter and performs a Semantic search, the top dataset returned (SRS Global Physical Asset & Issuer Intelligence) shows relevant mentions from four sources, including a Whitepaper, a Case Study, and Marketing materials.

Because the top source (a Case Study PDF) itself contains 12 mentions related to the query, the user can open a view that lists the mentions in the order they appear in the document, with the page number where each appears and a relevance score to help prioritize which sections of the document to review.
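The mention view described above can be modeled as a simple sort: reading order for display, plus a flag marking the highest-scoring mentions to review first. The `Mention` fields (including a character `offset` within the page) and the `highlight_top` parameter are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mention:
    page: int      # page where the text appears
    offset: int    # hypothetical: character offset of the chunk within its page
    score: float   # relevance to the query
    text: str


def document_view(mentions, highlight_top=3):
    """Mentions in reading order, each flagged if it is among the highest-scoring ones."""
    top = set(sorted(mentions, key=lambda m: m.score, reverse=True)[:highlight_top])
    ordered = sorted(mentions, key=lambda m: (m.page, m.offset))
    return [(m.page, round(m.score, 2), m in top, m.text) for m in ordered]


mentions = [
    Mention(5, 0, 0.61, "a"),
    Mention(2, 40, 0.74, "b"),
    Mention(2, 10, 0.58, "c"),
    Mention(9, 0, 0.70, "d"),
]
for row in document_view(mentions, highlight_top=2):
    print(row)
```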

In the top mention for the Whitepaper source, the embedding model also correctly related the query term “hurricane” to the sentence “The climate risk impact profile covers tropical cyclones, inland and coastal flooding, and wildfire, the primary hazards of business interruption and damage”, which does not contain the word itself but rather a closely related term.

More to Come with Semantic Search!

We are excited to see our clients start using this new search experience, and we hope it helps them find and prioritize data with the information and nuance needed for the wide variety of research questions they are asking.

This project has opened up many possibilities for applying large language embedding models elsewhere on our platform, and we can’t wait to share more as we release new developments.

We imagine many of the clients we work with are building similar systems that take advantage of recent advances in large language models, so we invite you to reach out and ask us anything about the architecture or design decisions described above.

And finally, we love to hear feedback from our users (the good, the bad, and the ugly), so please send it our way!